Once again, my thoughts are turning into reality.

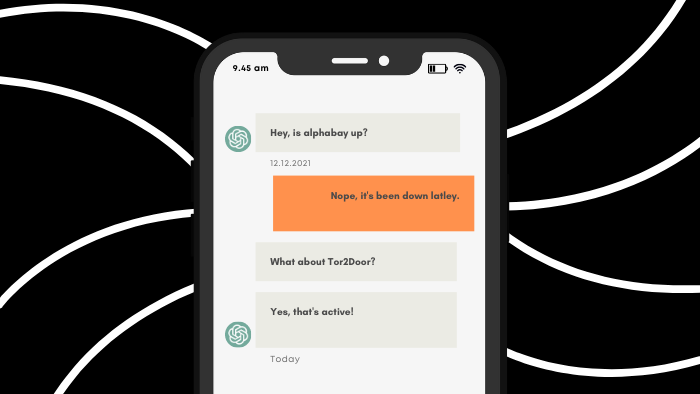

When I came across ChatGPT and its various prompts, a thought immediately crossed my mind: Is there going to be a ChatGPT for the Dark Web?

At the beginning of May, a friend of mine sent me a tweet showing that it was really happening: a new language model called DarkBERT had been trained on Dark Web data.

In this blog post, I’ll walk you through how DarkBERT was built, what it can do, and where it falls short. So without further ado, let’s begin!

How Was DarkBERT Created?

The creators of DarkBERT started by crawling the Dark Web and collecting pages as raw material for a text corpus.

They then cleaned and filtered this raw text with text preprocessing methods to prepare it for DarkBERT’s pretraining.
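The post doesn’t spell out which preprocessing methods were used. Purely as an illustration, here is a minimal Python sketch of two common corpus-cleaning steps, assuming exact-duplicate removal and a minimum-length filter. `preprocess`, `normalize`, and `MIN_WORDS` are hypothetical names, and DarkBERT’s real pipeline may look quite different.

```python
import hashlib
import re

# Hypothetical threshold: pages with fewer words than this are dropped.
MIN_WORDS = 20


def normalize(text: str) -> str:
    """Collapse runs of whitespace so trivially different copies hash the same."""
    return re.sub(r"\s+", " ", text).strip()


def preprocess(pages: list[str], min_words: int = MIN_WORDS) -> list[str]:
    """Drop very short pages and exact duplicates, keeping first occurrences in order."""
    seen: set[str] = set()
    kept: list[str] = []
    for page in pages:
        text = normalize(page)
        if len(text.split()) < min_words:
            continue  # too little text to be useful for pretraining
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate of a page we already kept
        seen.add(digest)
        kept.append(text)
    return kept
```

For example, two copies of the same forum page that differ only in spacing collapse to one entry, and a one-word "Login" page is discarded.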

Finally, they pretrained DarkBERT on this collected and preprocessed corpus.
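The post doesn’t describe the training objective itself. DarkBERT is based on RoBERTa, so assuming it was pretrained with RoBERTa-style masked language modeling, the sketch below shows how a single training example could be masked: roughly 15% of token positions are selected, and of those, 80% become a mask token, 10% become a random token, and 10% stay unchanged. `mask_tokens`, `MASK_ID`, and `VOCAB_SIZE` are hypothetical; a real setup would take them from the tokenizer and use a training framework.

```python
import random

# Hypothetical values for illustration; a real setup takes these from the tokenizer.
MASK_ID = 4
VOCAB_SIZE = 1_000
IGNORE = -100  # label meaning "no loss at this position" (a common PyTorch convention)


def mask_tokens(token_ids, rng, mask_prob=0.15):
    """Build (inputs, labels) for one masked-language-modeling example."""
    inputs = list(token_ids)
    labels = [IGNORE] * len(inputs)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mask_prob:
            continue  # position not selected: nothing to predict here
        labels[i] = tok  # the model is trained to recover the original token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK_ID  # 80% of selected positions: mask token
        elif roll < 0.9:
            inputs[i] = rng.randrange(VOCAB_SIZE)  # 10%: random token
        # remaining 10%: leave the original token in place
    return inputs, labels
```

Passing a seeded `random.Random` makes the masking reproducible, which is handy when checking the logic; during real pretraining, each example would typically be re-masked on the fly so the model sees different masks across epochs.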

Uses of DarkBERT

1. Dark Web Activity Classification: DarkBERT can be used to classify different types of activities on the Dark Web such as illicit drug sales, weapons trading, and other illegal activities. The model is trained to analyze the specific language used on the Dark Web and can be used to assess the level of risk associated with specific activities.

2. Ransomware Leak Site Detection: One of the main uses of DarkBERT is identifying ransomware leak sites on the Dark Web. This is an important task for law enforcement agencies and cybersecurity firms as they monitor and try to prevent criminal activity. By analyzing the text on a site, DarkBERT can help distinguish ransomware leak sites from other Dark Web sites.

3. Noteworthy Thread Detection: DarkBERT can also be used to detect noteworthy threads on the Dark Web. These threads may contain important information about illegal activities, cybercriminals, or other topics of interest. By analyzing the language of these threads, DarkBERT can help researchers identify them more quickly.

4. Threat Keyword Inference: DarkBERT can be used to identify threat keywords and make inferences about the potential danger of specific words and phrases. This can help security researchers and law enforcement agencies identify potential threats or malicious actors on the Dark Web.
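Classification tasks like these are usually handled by fine-tuning a pretrained model such as DarkBERT with a classification head on labeled examples. Assuming such a fine-tuned classifier that outputs one raw score (logit) per class for a page, the hypothetical Python sketch below turns those scores into probabilities with a softmax, picks the most likely label, and flags the page when a risky label is predicted with enough confidence. `LABELS`, `classify`, and the 0.5 threshold are illustrative assumptions, not part of DarkBERT.

```python
import math

# Hypothetical label set for an activity classifier fine-tuned on top of DarkBERT.
LABELS = ["drugs", "weapons", "other"]


def softmax(logits):
    """Convert raw scores to probabilities (subtracting the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, threshold=0.5):
    """Return (label, probability, flagged) for one page's classifier output."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    label = LABELS[best]
    # Only non-"other" labels predicted with enough confidence are flagged for review.
    flagged = label != "other" and probs[best] >= threshold
    return label, probs[best], flagged
```

For example, `classify([2.0, 0.1, -1.0])` returns the label `"drugs"` with a probability of about 0.83 and flags the page. Raising the threshold trades fewer false alarms for more missed pages.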

Overall, DarkBERT is a powerful tool for analyzing Dark Web content and improving our understanding of this often-hidden corner of the internet. By analyzing language use and identifying patterns, the model can assist law enforcement agencies, cybersecurity firms and other researchers in identifying potential criminal activity and threats.

What Are the Limitations of DarkBERT?

Although DarkBERT has many capabilities, it has a few limitations you should know about:

- DarkBERT’s training data is primarily in English, because the vast majority of Dark Web text is in English

- There is only a limited amount of non-English pretraining data available, which makes building a multilingual model challenging

- Evaluating downstream tasks may be difficult, since it requires high-quality annotated task-specific datasets in multiple languages

- DarkBERT may not be optimal for non-English tasks, and further pretraining on language-specific data may be necessary to leverage its full potential

- Some tasks may require further fine-tuning using task-specific data, as seen in the Ransomware Leak Site Detection and Noteworthy Thread Detection use case scenarios

- There is a shortage of publicly available Dark Web task-specific datasets, which may require researchers to manually annotate or create new datasets to fine-tune DarkBERT

What Safety Measures Did DarkBERT’s Creators Take?

The creators of DarkBERT recognize that a language model this powerful, trained on Dark Web data, could have harmful consequences if misused. They therefore took several ethical considerations into account. Here are some of them:

1. Crawling the Dark Web: The creators of DarkBERT took care not to expose themselves to content that must not be accessed, such as child pornography. Their automated web crawler discards non-text media and collects only raw text, avoiding exposure to potentially illegal and sensitive media.

2. Sensitive Information Masking: To prevent DarkBERT from learning representations of sensitive information, such as email addresses or phone numbers, the researchers mask this information in the corpus before feeding it to the model. They plan to publicly release only the version of DarkBERT pretrained on this preprocessed corpus, to prevent misuse. Based on extensive testing, they report that it is infeasible to infer private or sensitive data from this preprocessed version of DarkBERT.

3. Annotator Ethics: The DarkBERT team recruited two researchers from a cyber threat intelligence company to help with annotation. To keep the process fair, both annotators labeled the same set of threads and received equal compensation.

4. Use of Public Dark Web Datasets: Dark Web datasets, such as DUTA and CoDA, are available upon request for academic research purposes only. The researchers of DarkBERT adhere to this guideline and only use these datasets in the context of their research. They don’t plan to publicly release the Dark Web text corpus used for pretraining DarkBERT due to the sensitive nature of the Dark Web domain.
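Masking of the kind described in point 2 can be sketched with regular expressions. The Python example below is a minimal illustration, assuming placeholder tokens `ID_EMAIL` and `ID_PHONE` (hypothetical names, not necessarily the ones DarkBERT’s creators used) and deliberately simple patterns. A real pipeline would need more robust rules, since the phone pattern here would also catch other long digit runs such as dates.

```python
import re

# Hypothetical placeholder tokens paired with deliberately simple patterns.
# Emails are masked first so digits inside an address are not mistaken for a phone number.
PATTERNS = [
    ("ID_EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    # Optional "+", then at least 9 characters of digits, spaces, dots, dashes or parentheses,
    # starting and ending on a digit and not glued to surrounding word characters.
    ("ID_PHONE", re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d(?!\w)")),
]


def mask_sensitive(text: str) -> str:
    """Replace email addresses and phone-number-like strings with placeholder tokens."""
    for token, pattern in PATTERNS:
        text = pattern.sub(token, text)
    return text
```

For example, `mask_sensitive("Contact bob.smith@example.com or +1 (555) 123-4567 now")` returns `"Contact ID_EMAIL or ID_PHONE now"`, so the model still sees that an email and a phone number appeared without learning their values.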

Who Is Behind DarkBERT?

The development of DarkBERT was supported by a grant from the Institute of Information & Communications Technology Planning and Evaluation (IITP), funded by the Korean government (MSIT). The grant (No. 2022-0-00740) was awarded for the development of Dark Web Hidden Service Identification and Real IP Trace Technology.

What’s the Future of DarkBERT?

In the future, the creators plan to improve the performance of Dark Web domain-specific pretrained language models using more recent architectures.

This effort will include crawling additional data to enable the creation of a multilingual language model. With further development, DarkBERT’s capabilities may be expanded, making it an even more powerful tool for analyzing Dark Web content.